Fixing and Securing HTTPS API Communication Between Dockerized Nuxt and FastAPI Apps Using Nginx

Original article can be found here: https://medium.com/codex/fixing-and-securing-https-api-communication-between-dockerized-nuxt-and-fastapi-apps-using-nginx-71998bdbd33d

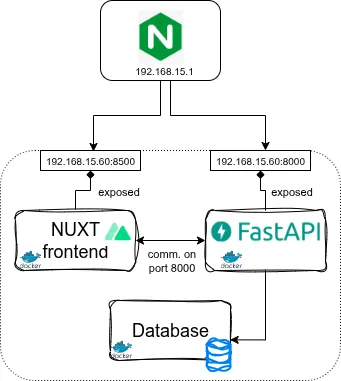

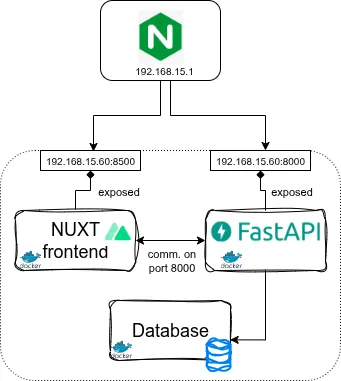

A Typical Modern Web Stack — Nuxt + FastAPI + DB

In many modern web applications, a common and effective architecture combines a JavaScript-based frontend with a Python-powered backend API. The frontend, often built with frameworks like Nuxt.js, handles everything the user sees — the interface, routing, and interactions in the browser. The backend, implemented with frameworks such as FastAPI, takes care of the logic behind the scenes: authenticating users, validating data, serving dynamic content, and communicating with the database.

In this setup, the frontend and backend are usually deployed as separate services, often containerized using Docker for isolation and ease of deployment. The frontend runs as a static or server-rendered app that talks to the backend via API calls — typically over REST endpoints like /v2/auth/login or /v2/data/query.

While this separation offers flexibility and scalability, it also introduces a few challenges, especially when it comes to securely connecting the two components over HTTPS in a production environment. That’s where an Nginx reverse proxy comes in. Acting as a gateway, Nginx can route external traffic to the appropriate service (frontend or backend), manage TLS/SSL termination, and ensure that all communication remains encrypted and seamless to the user.

However, getting that communication right — especially when HTTPS is involved and the services live in different Docker containers — can be trickier than it seems. Subtle configuration issues can cause frustrating problems like mixed-content warnings, blocked API calls, or redirect loops.

In this post, we’ll walk through exactly that kind of issue — using a real-world setup with Nuxt + FastAPI + Nginx, and how we debugged and fixed HTTPS and proxy routing errors to make everything work securely and smoothly.

Initial Configuration

To publish my application, I initially exposed only the Nuxt frontend (unlike the architecture shown above). To do this, I used the following Nginx config for the subdomain.

    # Redirect anything on port 80 to 443
    server {
        listen 80;
        server_name [YOUR_PUBLIC_DOMAIN.tld];
        return 301 https://$host$request_uri;
    }

    server {
        # variables: upstream address of the Nuxt frontend
        set $backend http://192.168.15.60:8500;

        # server info
        listen 443 ssl;
        http2 on;
        server_name [YOUR_PUBLIC_DOMAIN.tld];

        # common ssl settings
        include /etc/nginx/ssl.conf;

        # mTLS
        # ssl_verify_client on;

        location / {
            proxy_pass $backend;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_set_header Host $http_host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # access_log and error_log take a file path; "on" would be read as a file name
            access_log /var/log/nginx/access.log;
            error_log /var/log/nginx/error.log;
        }
    }

For common proxy parameters and the contents of ssl.conf, you can refer to my ultimate secure Nginx post.

The Problem: Mixed Content Blocking the Login Request

When everything finally seemed wired up and ready, the first serious issue surfaced during a simple login attempt. The frontend — our Nuxt.js app — failed to communicate with the backend FastAPI service. Instead of proceeding to the authentication flow, it threw a network resource fetch error, leaving the login form in a perpetual loading state.

Opening the browser’s developer console quickly revealed the root cause:

Blocked loading mixed active content “http://[YOUR_PUBLIC_DOMAIN]/v2/auth/login”

In other words, while the frontend was being served securely over HTTPS, it was trying to call the backend API over HTTP. Modern browsers block this kind of “mixed active content” by default, since it breaks the secure HTTPS chain and exposes users to potential man-in-the-middle risks.

This mismatch happens surprisingly often in Dockerized environments where services communicate internally over plain HTTP while being proxied externally through HTTPS. In this case, the proxy configuration and environment variables weren’t properly aligned — causing the frontend to call an insecure endpoint even though the public site was fully secured.
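To make the browser's rule concrete, here is a minimal Python sketch of the check described above. It is a simplification of what browsers actually do (real implementations have exceptions, for example for localhost), and the function name and the app.example.com host are placeholders chosen for illustration, not part of the original setup.

```python
from urllib.parse import urlsplit


def is_mixed_content(page_url: str, request_url: str) -> bool:
    """Return True when a page served over HTTPS requests a plain-HTTP URL.

    Simplified model of the browser rule; hypothetical helper for illustration.
    """
    page_scheme = urlsplit(page_url).scheme.lower()
    request_scheme = urlsplit(request_url).scheme.lower()
    return page_scheme == "https" and request_scheme == "http"


# app.example.com is a placeholder for [YOUR_PUBLIC_DOMAIN]
page = "https://app.example.com/login"
print(is_mixed_content(page, "http://app.example.com/v2/auth/login"))   # -> True
print(is_mixed_content(page, "https://app.example.com/v2/auth/login"))  # -> False
```

The first call mirrors the blocked login request from the console message: same domain, but the wrong scheme.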

Solution

Avoid Hardcoding Internal Container IPs

One critical detail to double-check is how your frontend makes API calls to the backend. In many early setups, it’s tempting to point the frontend directly at the FastAPI service’s internal Docker IP — something like:

http://172.30.1.5:8000/v2/auth/login

However, this approach quickly causes problems once you introduce an Nginx reverse proxy and HTTPS. From the browser’s perspective, requests to a hardcoded internal IP won’t be routed correctly through Nginx, and they’ll also be seen as insecure HTTP calls — leading to mixed content errors like the one we encountered earlier.

The proper approach is to use relative paths instead of absolute URLs in your API calls. For example:

/v2/auth/login

With this setup, all requests from the frontend automatically go through Nginx, which can then route them internally to the FastAPI container while preserving HTTPS encryption externally. This ensures that your frontend remains unaware of private container IPs and that all communication follows the same secure entry point — your reverse proxy.
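The effect of a relative path can be sketched with Python's standard URL resolution, which follows the same rules a browser applies when it resolves a path against the current page. The helper name and the app.example.com host are assumptions made for this example only.

```python
from urllib.parse import urljoin


def resolve_api_url(page_url: str, api_path: str) -> str:
    """Resolve an API path against the page URL, as a browser would.

    Hypothetical helper; it only illustrates URL resolution.
    """
    return urljoin(page_url, api_path)


# app.example.com stands in for the public domain served by Nginx
page = "https://app.example.com/login"

# A relative path inherits the scheme and host of the page
print(resolve_api_url(page, "/v2/auth/login"))
# -> https://app.example.com/v2/auth/login

# An absolute URL keeps its own scheme and host and bypasses the proxy
print(resolve_api_url(page, "http://172.30.1.5:8000/v2/auth/login"))
# -> http://172.30.1.5:8000/v2/auth/login
```

Because the relative path picks up https and the public host automatically, the frontend never needs to know where the backend actually runs.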

Routing API Requests to the Backend

Now that the frontend is using relative paths for its API calls, the next step is to ensure those requests are correctly routed to the FastAPI backend. Since our backend is served under a sub-path of the same main domain, we need Nginx to recognize those calls, such as /v2/auth/login or /v2/data/query, and forward them to the appropriate service.

To do this, the FastAPI container must be reachable by Nginx. If Nginx and the application containers share the same host or Docker network, you usually don’t need to expose FastAPI’s port to the public; Nginx can route requests directly to it through the internal network. Note that the architecture diagram above shows a different arrangement: there, the proxy still sits in front of both the frontend and the backend, but it runs on a different machine, so the FastAPI port has to be reachable from that machine (which is why the config below targets 192.168.15.60:8000).

To make this work, we simply extend the existing Nginx configuration and add a new location block dedicated to forwarding the API sub-paths to FastAPI. This enables the proxy to distinguish between static frontend assets and dynamic API endpoints, ensuring each request reaches the correct container.

    location /v2/ {
        proxy_pass http://192.168.15.60:8000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
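How Nginx chooses between the existing location / block and the new location /v2/ block can be sketched as a longest-prefix match. The Python below is only a model of that rule for plain prefix locations (regex locations and modifiers are ignored); the function name and the UPSTREAMS table are illustrative, with the addresses taken from the configs in this post.

```python
from typing import Mapping


def pick_upstream(path: str, upstreams: Mapping[str, str]) -> str:
    """Return the upstream whose location prefix is the longest match for path.

    Simplified model of Nginx prefix-location selection; hypothetical helper.
    """
    matches = [prefix for prefix in upstreams if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no location matches {path!r}")
    return upstreams[max(matches, key=len)]


# Location prefix -> proxy_pass target, as in the configs shown in this post
UPSTREAMS = {
    "/": "http://192.168.15.60:8500",     # Nuxt frontend
    "/v2/": "http://192.168.15.60:8000",  # FastAPI backend
}

print(pick_upstream("/v2/auth/login", UPSTREAMS))  # -> http://192.168.15.60:8000
print(pick_upstream("/login", UPSTREAMS))          # -> http://192.168.15.60:8500
```

Both prefixes match /v2/auth/login, but /v2/ is longer, so the request goes to FastAPI while everything else still falls through to the frontend.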

Enforcing HTTPS to Eliminate Mixed Content

With the new routing rules in place, API calls now reach the correct service, but the original mixed content issue can still remain. The reason is that Nginx talks to the backend over plain HTTP, so the backend does not know that the browser originally connected over HTTPS. Any absolute URL it generates, for example in a redirect response, may therefore start with http://, and the browser blocks it just as before.

To fully resolve this, we need to handle the protocol at the proxy level: tell the backend which scheme the client actually used, and rewrite any http:// redirect targets coming back from the backend to https://. This way, the client-side application only ever sees HTTPS URLs.

In practice, this can be achieved by adding just two additional lines to the location /v2/ {} block within your Nginx configuration.

    location /v2/ {
        proxy_pass http://192.168.15.60:8000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_set_header Host $http_host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto https;
        proxy_redirect http:// https://;
    }

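The X-Forwarded-Proto header tells the backend that the original request was made over HTTPS, and proxy_redirect rewrites the http:// prefix of Location headers in backend responses to https://. Together, they ensure that every URL the browser sees uses HTTPS, so all API traffic between the browser and the proxy stays within an encrypted channel and the mixed content block disappears.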
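What the two added lines do can be modelled in a few lines of Python. This is a sketch of the behaviour, not of how Nginx or FastAPI implement it: both function names are hypothetical, app.example.com is a placeholder host, and whether a backend actually honours X-Forwarded-Proto depends on how it is configured.

```python
from typing import Mapping


def effective_scheme(headers: Mapping[str, str], default: str = "http") -> str:
    """Scheme a proxy-aware backend could use when building absolute URLs.

    Hypothetical helper: falls back to the scheme of the direct connection.
    """
    return headers.get("X-Forwarded-Proto", default)


def rewrite_location(location: str) -> str:
    """Model of `proxy_redirect http:// https://;` applied to a Location value."""
    prefix = "http://"
    if location.startswith(prefix):
        return "https://" + location[len(prefix):]
    return location


# Without the header, the backend only sees the plain-HTTP hop from Nginx
print(effective_scheme({}))                              # -> http
print(effective_scheme({"X-Forwarded-Proto": "https"}))  # -> https

# A redirect generated with the wrong scheme is corrected on the way out
print(rewrite_location("http://app.example.com/v2/data/query/"))
# -> https://app.example.com/v2/data/query/
```

The header fixes the problem at the source when the backend respects it, while the Location rewrite acts as a safety net for redirects that still come back with http://.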

Conclusion

In modern web architectures where the frontend and backend run as separate containerized services, ensuring secure and seamless communication is crucial yet often challenging. This post detailed how common pitfalls — like hardcoded backend IP addresses and missing HTTPS enforcement — can cause frustrating issues such as mixed content blocking and failed API calls.

By employing relative paths for API requests, setting up precise Nginx routing for backend services, and enforcing HTTPS redirects at the proxy level, you can effectively eliminate mixed content errors and provide a smooth, secure user experience. These steps not only safeguard data in transit but also simplify your deployment by centralizing traffic management in Nginx.

With this foundation, your Nuxt + FastAPI stack will communicate correctly and securely, allowing you to focus on building applications without worrying about insecure or broken API flows.